We see and hear about artificial intelligence (AI) and machine learning (ML) everywhere these days. Some people claim they will solve almost all our problems, much as people did during the 5G hype a few years ago. Opinions range from AI being the scariest invention in history to the greatest technological advance imaginable. Many think it is a good thing, but there are plenty of concerns and caveats.

No matter what you think about the promise or threat of AI, one thing is indisputable: running AI requires a great deal of computing power and electricity. GPT-3, one of the larger popular models, has 175 billion parameters, or weights. With so many associations and computations across so many dimensions, working through AI algorithms demands a significant investment in compute and energy.
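To put 175 billion parameters in perspective, here is a back-of-envelope sketch. It assumes (these are not figures from the article) that weights are stored as 16-bit floats and that a forward pass costs roughly 2 floating-point operations per parameter per token, a common rule of thumb.

```python
# Back-of-envelope sizing for a 175-billion-parameter model such as GPT-3.
# Assumptions (not from the article): weights stored as 16-bit floats, and
# about 2 floating-point operations (one multiply plus one add) per parameter
# for every token processed in a forward pass.

PARAMS = 175e9          # GPT-3 parameter count
BYTES_PER_WEIGHT = 2    # FP16 storage (assumption)
FLOPS_PER_PARAM = 2     # one multiply-accumulate counted as 2 FLOPs (rule of thumb)


def weight_memory_gb(params: float, bytes_per_weight: int) -> float:
    """Memory needed just to hold the weights, in gigabytes (1e9 bytes)."""
    return params * bytes_per_weight / 1e9


def forward_flops_per_token(params: float) -> float:
    """Approximate floating-point operations for one token through the network."""
    return FLOPS_PER_PARAM * params


if __name__ == "__main__":
    print(f"Weights: {weight_memory_gb(PARAMS, BYTES_PER_WEIGHT):.0f} GB")
    print(f"Compute: {forward_flops_per_token(PARAMS) / 1e9:.0f} GFLOP per token")
```

Under these assumptions the weights alone occupy about 350 GB and each token costs roughly 350 GFLOP. In a conventional digital system those weights must be streamed from memory to the compute units, and that data movement is a large part of what the analog approaches below try to reduce.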

This problem is well understood, and one feasible way to overcome it is analog computing. That certainly has a "Back to the Future" feel to it, since early electronic computers were all analog, built first with vacuum-tube amplifiers, then with discrete transistors, and finally with operational amplifier ICs (op amps).

Analog circuits are not limited to large-scale computing needs. Even a basic function such as multiplication can be done with a handful of active devices, and this is usually the most cost-effective, lowest-power method. For example, the AD534 analog multiplier, designed by Barrie Gilbert of Analog Devices in 1976 around an ingenious arrangement of transistors (Figure 1), is still sold today. It can provide a continuous, real-time output proportional to instantaneous power (voltage times current).

Figure 1: The AD534 analog multiplier takes two voltage inputs and provides an analog voltage output proportional to their product, with almost no time lag.
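A minimal sketch of using an ideal multiplier as a power meter, assuming the idealized transfer function W = XY / 10 V of the AD534's basic multiplier connection and ignoring offsets, noise, and bandwidth limits. The voltage divider ratio, shunt resistor, and averaging step are hypothetical front-end choices, not taken from the article; in hardware the averaging would typically be a low-pass filter on the multiplier output.

```python
import math

SCALE_FACTOR_V = 10.0  # idealized multiplier scale factor: W = X * Y / 10 V


def ideal_multiplier(x_v: float, y_v: float) -> float:
    """Idealized analog multiplier output in volts (no offset, noise, or bandwidth limit)."""
    return x_v * y_v / SCALE_FACTOR_V


def average_power(v_peak: float, i_peak: float, phase_rad: float,
                  divider: float = 20.0, shunt_ohms: float = 0.1,
                  samples: int = 10_000) -> float:
    """Estimate average power in watts from one cycle of sinusoidal v(t) and i(t).

    Hypothetical front end: a resistive divider scales the voltage down by
    `divider` to keep X within a +/-10 V input range, and a shunt resistor turns
    the current into a small Y voltage. The multiplier output is the scaled
    instantaneous power; averaging it stands in for a low-pass filter.
    """
    total = 0.0
    for n in range(samples):
        theta = 2 * math.pi * n / samples                        # evenly spaced over one cycle
        x = v_peak * math.sin(theta) / divider                   # volts at the X input
        y = i_peak * math.sin(theta - phase_rad) * shunt_ohms    # volts at the Y input
        total += ideal_multiplier(x, y)                          # scaled instantaneous power
    w_avg = total / samples
    # Undo the divider, shunt, and multiplier scaling to recover watts.
    return w_avg * SCALE_FACTOR_V * divider / shunt_ohms


if __name__ == "__main__":
    # 170 V peak, 2 A peak, current lagging the voltage by 60 degrees.
    print(f"{average_power(170.0, 2.0, math.radians(60)):.2f} W")
```

The result matches the textbook value (V_peak · I_peak / 2) · cos φ, here about 85 W. Before averaging, each multiplier output sample is the instantaneous power the paragraph above describes.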

Higher-bandwidth analog multipliers can be used in RF signal chains to determine the root mean square (rms) voltage of complex waveforms. Such single-chip analog computing can reach speeds in the gigahertz range, one to two orders of magnitude faster than an oversampled digital system can support.
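An rms measurement maps naturally onto a multiplier: square the signal, average it, and take the square root. The sketch below assumes ideal components and uses a hypothetical two-tone test signal to show why this matters for complex waveforms, where the familiar peak/√2 shortcut (valid only for a pure sine) gives the wrong answer.

```python
import math


def rms_by_squaring(samples: list[float]) -> float:
    """RMS as a multiplier-based detector computes it: square the signal
    (a multiplier with both inputs tied together), average it (a low-pass
    filter in hardware), then take the square root."""
    mean_square = sum(s * s for s in samples) / len(samples)
    return math.sqrt(mean_square)


def two_tone(a1: float, f1: int, a2: float, f2: int, n: int = 4096) -> list[float]:
    """n samples over one unit period of a1*sin(2*pi*f1*t) + a2*sin(2*pi*f2*t).
    f1 and f2 are whole numbers of cycles so the window holds complete periods."""
    return [a1 * math.sin(2 * math.pi * f1 * k / n) + a2 * math.sin(2 * math.pi * f2 * k / n)
            for k in range(n)]


if __name__ == "__main__":
    wave = two_tone(1.0, 3, 0.5, 7)
    measured = rms_by_squaring(wave)
    expected = math.sqrt(1.0**2 / 2 + 0.5**2 / 2)    # tones at different frequencies add in power
    naive = max(abs(s) for s in wave) / math.sqrt(2)  # peak/sqrt(2): correct only for a pure sine
    print(f"rms={measured:.4f} expected={expected:.4f} peak/sqrt(2)={naive:.4f}")
```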

Analog meets artificial intelligence

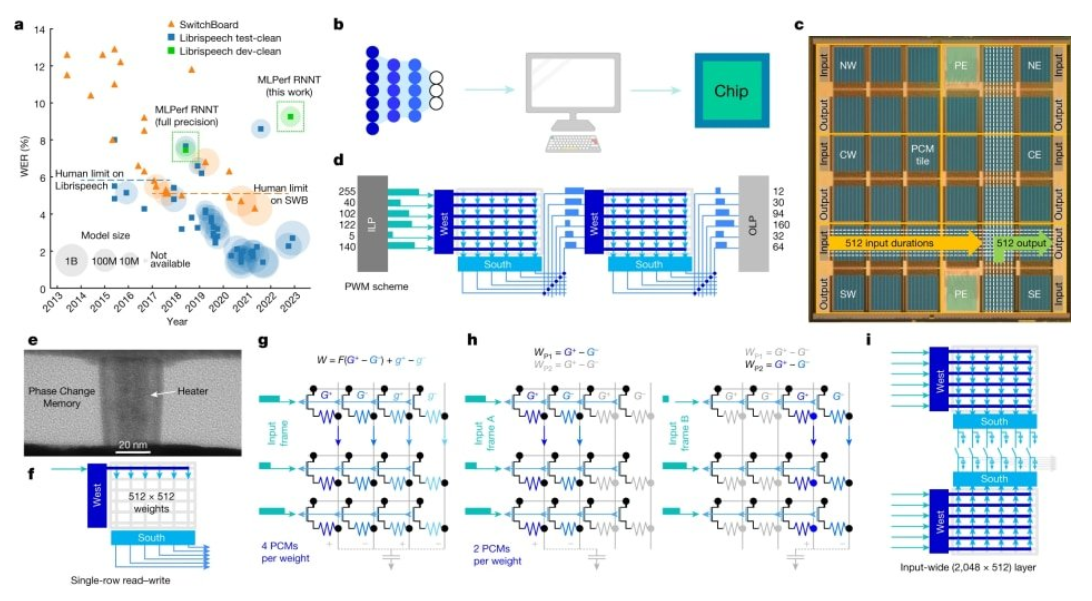

Analog AI uses a different type of analog topology. Among the many vendors working on "analog AI," IBM has developed computing cores that use phase-change memory (PCM), not to be confused with pulse-code modulation (Figure 2).

Figure 2: Overview of the construction and layout of the phase-change-material-based analog approach

In this design, an electrical pulse applied to the material changes the device's conductance by switching the material between amorphous and crystalline states. A small electrical pulse makes the device more crystalline, giving it a lower resistance, while a large enough pulse makes the device amorphous, giving it a high resistance.
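A toy model of the switching behavior just described, useful only for intuition: small pulses nudge the cell toward the crystalline (low-resistance) state in steps, while a pulse above a threshold returns it to the amorphous (high-resistance) state. The resistance values, step size, and threshold voltage are hypothetical, and real PCM switching also depends on pulse duration and shape, which this sketch ignores.

```python
from dataclasses import dataclass


@dataclass
class ToyPCMCell:
    """Hypothetical phase-change memory cell (illustrative numbers only).

    crystallinity runs from 0.0 (fully amorphous, high resistance)
    to 1.0 (fully crystalline, low resistance).
    """
    crystallinity: float = 0.0
    r_amorphous: float = 1e6         # ohms, assumed
    r_crystalline: float = 1e4       # ohms, assumed
    set_step: float = 0.1            # crystallinity gained per small pulse, assumed
    reset_threshold_v: float = 3.0   # pulses at or above this amorphize the cell, assumed

    def apply_pulse(self, amplitude_v: float) -> None:
        if amplitude_v >= self.reset_threshold_v:
            # Large pulse: the cell returns to the amorphous, high-resistance state.
            self.crystallinity = 0.0
        elif amplitude_v > 0:
            # Small pulse: partial crystallization, so resistance drops a little.
            self.crystallinity = min(1.0, self.crystallinity + self.set_step)

    @property
    def conductance(self) -> float:
        """Interpolate conductance linearly between the two end states (a simplification)."""
        g_a = 1 / self.r_amorphous
        g_c = 1 / self.r_crystalline
        return g_a + self.crystallinity * (g_c - g_a)

    @property
    def resistance(self) -> float:
        return 1 / self.conductance


if __name__ == "__main__":
    cell = ToyPCMCell()
    for _ in range(5):
        cell.apply_pulse(1.0)            # five small pulses
    print(f"after small pulses: {cell.resistance:.0f} ohms")
    cell.apply_pulse(5.0)                # one large pulse
    print(f"after large pulse:  {cell.resistance:.0f} ohms")
```

The intermediate states between the two extremes are what let a single cell hold a continuous-valued weight rather than a single bit.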

The PCM device stores its state, called a synaptic weight, as a continuous value between the amorphous and crystalline states. This value is held in the physical atomic configuration of each device, so the neural network's weights are encoded directly on the chip. The memory is non-volatile, so the weights are retained when the power is turned off.
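The article does not say how signed weights are encoded, since a conductance cannot be negative. One common approach in analog in-memory computing, assumed here, is a differential pair of devices per weight, with the weight proportional to G+ − G−. The maximum conductance `G_MAX` and the function names are hypothetical.

```python
G_MAX = 25e-6  # hypothetical maximum device conductance, siemens


def weights_to_conductance_pairs(weights: list[float], g_max: float = G_MAX):
    """Map signed weights to (G_plus, G_minus) pairs so that each
    weight is proportional to G_plus - G_minus.

    Returns the pairs and the scale (largest |weight|) needed to map back.
    """
    w_scale = max(abs(w) for w in weights) or 1.0   # avoid dividing by zero for all-zero weights
    pairs = []
    for w in weights:
        g = abs(w) / w_scale * g_max                # weight magnitude as a conductance
        # Positive weights program the "plus" device, negative ones the "minus" device.
        pairs.append((g, 0.0) if w >= 0 else (0.0, g))
    return pairs, w_scale


def conductance_pairs_to_weights(pairs, w_scale: float, g_max: float = G_MAX) -> list[float]:
    """Read weights back from the differential conductances."""
    return [(g_plus - g_minus) / g_max * w_scale for g_plus, g_minus in pairs]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]
    pairs, scale = weights_to_conductance_pairs(weights)
    print(pairs)
    print(conductance_pairs_to_weights(pairs, scale))
```

Real devices add programming error and limited precision, so the read-back weights would only approximate the targets; this sketch assumes perfect programming.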

The IBM research team's design contains 35 million phase-change memory devices per chip, supports models with up to 17 million parameters, and is optimized for the multiply-accumulate (MAC) operations that dominate deep learning workloads. By applying inputs to the rows of a resistive non-volatile memory (NVM) array and collecting the current along the columns, the IBM team performs MAC operations inside the memory itself.
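The in-memory MAC described above follows from two circuit laws: each device passes a current I = V·G (Ohm's law), and currents meeting on a shared column wire add (Kirchhoff's current law). The sketch below assumes an ideal array with no wire resistance, device noise, or converter effects; the voltages and conductances are arbitrary illustrative values. The loops only serialize what the physical array computes in parallel.

```python
def crossbar_mac(voltages: list[float], conductances: list[list[float]]) -> list[float]:
    """Column currents (amperes) of an ideal resistive crossbar.

    voltages: input voltage on each row (volts), length R.
    conductances: R x C matrix of device conductances (siemens).
    Column j carries I_j = sum_i V_i * G_ij: one multiply per device,
    with the accumulation done by current summing on the column wire.
    """
    if len(conductances) != len(voltages):
        raise ValueError("need one conductance row per input voltage")
    cols = len(conductances[0])
    currents = [0.0] * cols
    for i, v in enumerate(voltages):
        for j in range(cols):
            currents[j] += v * conductances[i][j]   # Ohm's law, then Kirchhoff summation
    return currents


if __name__ == "__main__":
    v = [0.1, 0.2]                      # row input voltages, volts
    g = [[1e-6, 2e-6],
         [3e-6, 4e-6]]                  # device conductances, siemens
    print(crossbar_mac(v, g))           # column currents, amperes
```

The column currents equal the vector-matrix product of the inputs and the stored weights, which is why the weights never have to leave the array.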

This eliminates the need to shuttle weights between the chip's memory and compute areas. The analog chip performs many MAC operations in parallel, saving both time and energy. The IBM team ran a range of AI tests, and the results showed that the prototype's performance per watt (a measure of efficiency) was about 14 times better than that of comparable systems.

Other analog artificial intelligence solutions

IBM is not the only organization looking to use analog techniques to reduce the power demands of AI. A team at MIT has designed a practical inorganic material for what they call a protonic programmable resistor, whose conductance is controlled by the movement of protons.

The device operates through an electrochemical process: the smallest ion, the proton, is inserted into an insulating oxide to modulate its electronic conductivity. This requires an electrolyte, similar to the one in a battery, that conducts protons but blocks electrons. To increase the conductance, more protons are pushed into the resistor's channel; to decrease it, protons are removed (Figure 3).

Figure 3: Voltage dependence of conductance modulation in the device, which relies on electrochemically inserting protons into an insulating oxide to modulate its electronic conductivity
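A toy model of the bidirectional programming described above: a pulse of one polarity pushes protons into the channel and raises conductance, and the opposite polarity pulls them out and lowers it. Counting protons in abstract "units", the linear conductance-per-unit relation, and the channel capacity are all hypothetical simplifications for illustration, not the MIT device's measured behavior.

```python
class ToyProtonicResistor:
    """Hypothetical model: channel conductance rises with the number of
    inserted proton units and falls as they are withdrawn.
    All numbers are illustrative assumptions, not device data."""

    def __init__(self, g_min: float = 1e-9, g_per_unit: float = 1e-9, capacity: int = 100):
        self.g_min = g_min            # conductance with no protons inserted, siemens
        self.g_per_unit = g_per_unit  # added conductance per proton unit, siemens
        self.capacity = capacity      # maximum proton units the channel can hold
        self.protons = 0              # proton units currently in the channel

    def pulse(self, polarity: int, units: int = 1) -> None:
        """polarity +1 pushes protons into the channel, -1 pulls them out."""
        if polarity not in (1, -1):
            raise ValueError("polarity must be +1 or -1")
        # Clamp to the physical range: cannot remove more than are present or exceed capacity.
        self.protons = max(0, min(self.capacity, self.protons + polarity * units))

    @property
    def conductance(self) -> float:
        return self.g_min + self.protons * self.g_per_unit


if __name__ == "__main__":
    r = ToyProtonicResistor()
    r.pulse(+1, 10)
    print(f"after inserting: {r.conductance:.2e} S")
    r.pulse(-1, 4)
    print(f"after removing:  {r.conductance:.2e} S")
```

Because the same mechanism moves conductance both up and down, the device can be nudged in either direction, which is what a weight update during training needs.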

Because the devices are very thin, the team can accelerate the movement of these ions with a strong electric field, pushing the ionic devices into the nanosecond operating regime. They applied up to 10 V across a special nanoscale solid-glass film that conducts protons, without permanently damaging it. The stronger the electric field, the faster the ionic device operates.
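Why thinness matters can be seen from the uniform-field approximation E = V/d. The article gives the voltage (up to 10 V) but not the film thickness, so the thicknesses below are hypothetical values chosen only to show the scaling.

```python
def field_v_per_m(voltage_v: float, thickness_m: float) -> float:
    """Uniform-field approximation across a film: E = V / d."""
    if thickness_m <= 0:
        raise ValueError("thickness must be positive")
    return voltage_v / thickness_m


def v_per_m_to_mv_per_cm(e_v_per_m: float) -> float:
    """Convert V/m to MV/cm, using 1 MV/cm = 1e6 V / 1e-2 m = 1e8 V/m."""
    return e_v_per_m / 1e8


if __name__ == "__main__":
    for thickness_nm in (10, 50, 100):          # hypothetical film thicknesses
        e = field_v_per_m(10.0, thickness_nm * 1e-9)
        print(f"{thickness_nm:>4} nm: {v_per_m_to_mv_per_cm(e):.1f} MV/cm")
```

At a fixed voltage, halving the thickness doubles the field, so a nanoscale film reaches field strengths that would need impractically high voltages in a bulk device.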

Other companies are also pursuing analog AI. Mythic's analog matrix processors integrate flash arrays with DACs and ADCs, which together store model parameters and perform low-power, high-performance matrix multiplication.

The company says its single-chip M1076 Analog Matrix Processor (AMP), built on the Mythic Analog Compute Engine (Mythic ACE), delivers the computing power of a GPU at one-tenth the power consumption (Figure 4).

Figure 4: This approach integrates an array of flash cells, used as tunable resistors, with DACs and ADCs, which together store model parameters and perform low-power, high-performance matrix multiplication

Taking in-memory computing to the extreme, the chip computes directly inside the memory array itself. It uses each memory element as a tunable resistor, supplying inputs as voltages and collecting outputs as currents. In other words, it uses analog computation for the core neural network matrix operation: multiplying an input vector by a weight matrix.
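A minimal sketch of the digital-in, digital-out signal path described above, assuming ideal converters: a DAC turns each digital input into a row voltage, the flash cells act as fixed conductances, column currents sum the products, and an ADC digitizes each column. The bit widths, reference voltage, full-scale rule, and conductance values are hypothetical, the sketch handles only non-negative inputs and weights, and it is not Mythic's actual design.

```python
def dac(code: int, bits: int, v_ref: float) -> float:
    """Ideal DAC: maps a digital code in [0, 2**bits - 1] to a voltage in [0, v_ref]."""
    return code / (2**bits - 1) * v_ref


def adc(current_a: float, bits: int, i_full_scale: float) -> int:
    """Ideal ADC: clamps a current to [0, i_full_scale] and rounds it to a code."""
    frac = min(max(current_a / i_full_scale, 0.0), 1.0)
    return round(frac * (2**bits - 1))


def analog_matvec(input_codes: list[int], conductances: list[list[float]],
                  in_bits: int = 8, out_bits: int = 8, v_ref: float = 1.0) -> list[int]:
    """Digital in, digital out; the multiply-accumulate happens as analog currents."""
    voltages = [dac(c, in_bits, v_ref) for c in input_codes]
    cols = len(conductances[0])
    # Column current = sum over rows of V_i * G_ij (Ohm's law plus Kirchhoff's current law).
    currents = [sum(v * row[j] for v, row in zip(voltages, conductances)) for j in range(cols)]
    # Hypothetical full-scale choice: the largest current any column could carry
    # if every input sat at v_ref.
    i_full_scale = max(sum(row[j] for row in conductances) for j in range(cols)) * v_ref
    if i_full_scale <= 0:
        raise ValueError("conductance matrix must contain a positive entry")
    return [adc(i, out_bits, i_full_scale) for i in currents]


if __name__ == "__main__":
    g = [[1e-6, 2e-6],
         [2e-6, 1e-6]]          # illustrative flash-cell conductances, siemens
    print(analog_matvec([255, 0], g))
```

The converters are where precision is traded for power: fewer ADC bits save energy but coarsen the result, which is one reason the DAC and ADC design matters as much as the array itself.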

Based on these research and early commercial efforts, analog computing may well be key to overcoming the challenges facing today's digital, compute-intensive, and power-hungry AI systems.

About Us

Heisener Electronic is a well-known international one-stop purchasing service provider for electronic components. Built on customer orientation and innovation, a sound process control system, a professional management team, and advanced inventory management technology, Heisener provides one-stop electronic component support services and is a preferred partner for enterprises and research institutions.